What exactly is dashboard UX design and how does it differ from regular dashboard development?

Dashboard UX design creates clean, clear interfaces that speed up decision-making by focusing on what users need to accomplish their business-critical goals. Regular dashboard development often ends in an overwhelming collection of graphs, dials, and tables; UX design instead starts with understanding user workflows and cognitive capabilities. The process moves from data-oriented design to task-oriented design, clustering content and functions around what people need to do, which reduces cognitive workload while supporting both novice and expert users.

Tip: Evaluate your current dashboards by observing how long users spend interpreting data versus making decisions - the ratio should favor decision-making time.

Why do business dashboards often become overwhelming and how do you prevent this?

Dashboard overwhelm typically results from teams not knowing which information matters, so they include every available metric instead of focusing on actionable insights. The result is cognitive overload: users spend more time interpreting data than making decisions. Prevention requires understanding user domains, tasks, workflows, and the specific business context where decisions get made. The design process prioritizes information hierarchy, visual clarity, and progressive disclosure that shows users what they need when they need it.

Tip: Start dashboard design by identifying the top three decisions each user type needs to make, then design around those specific decision-making workflows.

How do you determine what information should be included in a dashboard design?

Information selection begins with understanding user segments, their roles, domain knowledge, and confidence levels with different data types. Through contextual inquiry and task analysis, we identify what challenges exist in current workflows, where things work well, and where they break down. The process involves capturing user personas, usage scenarios, and decision-making contexts that inform which metrics actually drive action versus those that are merely interesting. Information architecture follows user mental models rather than organizational structure.

Tip: Document the specific actions users take after viewing each piece of information - if no action follows, question whether that information belongs on the dashboard.

What's your approach to designing for different types of dashboard users?

Dashboard design requires understanding how user characteristics vary across domain knowledge, cognitive ability, physical ability, and accessibility needs. Different user types need different interaction architectures - executives might need high-level trend visualization while analysts require detailed filtering capabilities. The design balances process-oriented flows for guided analysis with data-oriented access for exploratory use. Multiple paths to goals accommodate both novice users who need guidance and expert users who want shortcuts and efficiency improvements.

Tip: Create user scenarios that include both typical use cases and edge cases like urgent decision-making or system failures to ensure your dashboard works under pressure.

How does the Experience Thinking framework apply to dashboard design?

Experience Thinking addresses dashboard design holistically across brand consistency (visual elements that reinforce organizational identity), content strategy (data governance and narrative structure), product functionality (interaction patterns and technical performance), and service support (training and ongoing optimization). This framework ensures dashboards integrate seamlessly with broader organizational systems rather than existing as isolated tools. The approach considers the complete user lifecycle from initial dashboard adoption through advanced usage and organizational change management.

Tip: Audit your dashboard design against all four Experience Thinking areas to identify gaps where disconnected elements might be limiting overall effectiveness and user adoption.

What role does accessibility play in dashboard UX design?

Accessibility in dashboard design ensures usability for people with different visual, cognitive, and motor abilities while improving the experience for all users. This includes appropriate color contrast for data visualization, sizing targets so users can easily interact with controls, and reducing distractions that interfere with data interpretation. Screen reader compatibility requires thoughtful data table structure and alternative text for visual elements. Accessibility also addresses cognitive load through clear navigation structures and consistent interaction patterns that build on existing user knowledge.

Tip: Test your dashboard designs with keyboard-only navigation and screen readers to identify usability barriers that also impact general user experience quality.

How do you balance simplicity with the need for detailed data access?

Effective dashboard design creates layered information architectures that provide immediate access to key insights while enabling drill-down into supporting details when needed. The approach uses progressive disclosure, contextual filtering, and smart defaults that reduce initial cognitive load while maintaining access to detailed analysis. Visual hierarchy guides attention to the most important information first, with secondary data available through intuitive interaction patterns. Foresight design approaches help anticipate future analytical needs while keeping current interfaces focused and actionable.

Tip: Implement the 5-second rule - users should understand the primary insights within 5 seconds, with detailed exploration available through clear interactive pathways.

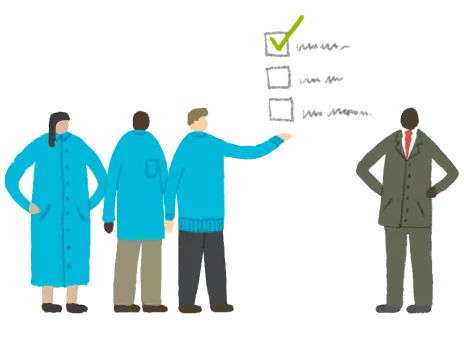

What user research methods do you use for dashboard design projects?

Dashboard research combines contextual inquiry, task analysis, and ethnographic observation to understand how people currently interact with data and make decisions. We use in-depth interviews and job shadowing to capture a day in the life of dashboard users, understanding where current workflows work well and where they break down. Card sorting helps organize information architecture that matches user mental models, while usability testing with wireframes and prototypes validates design concepts before development. The research provides both quantitative task metrics and qualitative insights about cognitive processes.

Tip: Observe users in their actual work environment rather than relying only on lab-based research, as context heavily influences how people interpret and act on data.

How do you identify the most important metrics and KPIs for dashboard users?

Metric identification starts with understanding business goals and user decision-making processes rather than available data sources. Through stakeholder interviews and workflow analysis, we identify what decisions users actually need to make and what information drives those decisions versus what's merely interesting to know. The process includes frequency analysis of different tasks, time-to-complete assessments, and identification of cognitive or material requirements for different analytical processes. Priority comes from understanding business impact and user task criticality rather than data availability.

Tip: Map each potential metric to specific decisions and actions users take - metrics that don't drive behavior shouldn't occupy prime dashboard real estate.
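
As a rough illustration, the mapping exercise in this tip could be recorded in a few lines of Python. This is a minimal sketch, not a prescribed tool: the `Metric` class, the function name, and every metric and decision listed are hypothetical placeholders for what stakeholder interviews would actually produce.

```python
from dataclasses import dataclass, field


@dataclass
class Metric:
    """A candidate dashboard metric and the user decisions it informs."""
    name: str
    decisions: list[str] = field(default_factory=list)


def split_by_actionability(metrics: list[Metric]) -> tuple[list[str], list[str]]:
    """Return (actionable, merely_interesting) metric names.

    A metric counts as actionable only if at least one decision is mapped to it.
    """
    actionable = [m.name for m in metrics if m.decisions]
    interesting = [m.name for m in metrics if not m.decisions]
    return actionable, interesting


# Hypothetical audit data; real entries would come from interviews and workflow analysis.
candidates = [
    Metric("open_support_tickets", ["reassign agents", "escalate to tier 2"]),
    Metric("page_views_all_time"),
    Metric("forecast_vs_actual_revenue", ["adjust quarterly targets"]),
]

actionable, interesting = split_by_actionability(candidates)
print("Candidates for prime dashboard space:", actionable)
print("Question or demote:", interesting)
```

Metrics that land in the second list are not necessarily wrong to track; they are simply candidates for a secondary view rather than the main screen.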

What's your approach to understanding user mental models for data interpretation?

Mental model research focuses on how users naturally categorize, associate, and interpret information in their domain context. We use open card sorting to understand how users group related concepts and label categories that make sense within their work environment. Cognitive task analysis examines complex analytical processes that engage memory, attention, judgment, and problem-solving capabilities. The research reveals terminology users naturally employ and conceptual relationships they expect to see in dashboard organization and navigation structure.

Tip: Pay attention to the language users naturally use when describing their work - dashboard labels should match their vocabulary rather than technical or organizational terminology.

How do you research the context and environment where dashboards will be used?

Environmental research examines physical workspace conditions, technology constraints, collaborative workflows, and situational factors that influence dashboard usage. This includes understanding device capabilities, screen sizes, lighting conditions, and whether dashboards are used individually or in group settings. We analyze interruption patterns, multitasking requirements, and urgency levels that affect how people consume information. The research also addresses organizational culture, decision-making hierarchies, and communication patterns that influence how insights get shared and acted upon.

Tip: Consider designing for worst-case scenarios like urgent decision-making, poor connectivity, or high-stress environments to ensure your dashboard remains usable when it matters most.

What techniques do you use to understand current dashboard pain points?

Pain point identification combines direct observation, user interviews, and analysis of current system usage patterns to understand where existing dashboards fail to support user needs. We document specific frustrations, workarounds, and places where users abandon analytical tasks or make decisions with incomplete information. The analysis includes both functional issues (slow loading, confusing navigation) and cognitive issues (information overload, unclear insights). Usage analytics help identify where people get stuck or spend disproportionate time without achieving their goals.

Tip: Document not just what users say is frustrating, but observe actual behavior patterns that reveal unconscious adaptations to poor dashboard design.

How do you validate user personas and usage scenarios for dashboard design?

Persona validation tests persona assumptions against real user behavior through additional field research and surveys. We create 5-10 detailed usage scenarios that capture intended use across different contexts, then walk through these scenarios with actual users to identify gaps between assumed and actual behavior. Validation includes checking persona accuracy across different organizational levels, departments, and experience levels. The process ensures design decisions are based on verified user needs rather than assumptions or stakeholder opinions.

Tip: Test persona accuracy by having real users review persona descriptions and usage scenarios - they should recognize themselves and their colleagues in the documented patterns.

What's your approach to researching dashboard performance requirements?

Performance research examines both technical requirements (loading speed, data refresh rates, system reliability) and user performance requirements (decision-making speed, accuracy, confidence levels). We analyze task completion times, error rates, and cognitive load indicators across different dashboard design approaches. The research includes understanding acceptable wait times for different types of analysis and how performance limitations impact user workflows and decision quality. Foresight design methods help anticipate future performance needs as data volumes and user expectations evolve.

Tip: Establish performance baselines for critical user tasks before redesigning - improvements should be measurable in terms of both system response and user task completion efficiency.
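
One way to make a baseline concrete is to summarize timed task attempts before and after a redesign. The sketch below assumes completion times in seconds and a simple error count per task; the function names and all sample numbers are hypothetical and only show the shape of the comparison.

```python
from statistics import median


def summarize_task(times_s: list[float], errors: int) -> dict[str, float]:
    """Summarize one task: median completion time and errors per attempt."""
    if not times_s:
        raise ValueError("need at least one timed attempt")
    return {
        "median_time_s": median(times_s),
        "error_rate": errors / len(times_s),
    }


def compare_to_baseline(baseline: dict[str, float], redesign: dict[str, float]) -> dict[str, float]:
    """Return redesign minus baseline per measure (negative means improvement)."""
    return {key: redesign[key] - baseline[key] for key in baseline}


# Hypothetical observations for one critical task, five participants each.
baseline = summarize_task([42.0, 55.0, 38.0, 61.0, 47.0], errors=2)
redesign = summarize_task([30.0, 35.0, 28.0, 41.0, 33.0], errors=1)
delta = compare_to_baseline(baseline, redesign)
print(delta)
```

The median is used here instead of the mean because a single very slow participant can otherwise dominate a small sample; that is a design choice, not a requirement.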

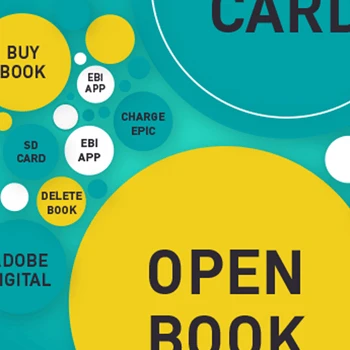

How do you structure information architecture for complex dashboards?

Dashboard information architecture starts with understanding how information chunks relate to each other and whether the structure supports quick finding and combining of related data. We research hierarchies and terminologies that users employ naturally, structuring content to match their knowledge and language skills rather than organizational data structures. The architecture supports users' information needs and provides content where they expect to find it, using card sorting and reverse card sorting to validate organizational approaches before visual design begins.

Tip: Structure dashboard navigation around user tasks and decision flows rather than data source organization or departmental boundaries.

What's your approach to organizing metrics and data visualizations?

Metric organization follows user mental models and task priorities rather than technical data relationships. We group related metrics according to decision-making contexts and create logical pathways between overview and detailed views. The organization considers frequency of access, importance levels, and logical relationships that users understand intuitively. Visual grouping, progressive disclosure, and contextual filtering help users navigate from high-level insights to supporting details without losing context or requiring complex navigation structures.

Tip: Organize metrics by the decisions they inform rather than by data source, department, or alphabetical order to support natural analytical workflows.

How do you handle navigation design for multi-level dashboard systems?

Multi-level navigation requires clear wayfinding that helps users understand where they are, where they came from, and where they can go next within the analytical journey. We design navigation structures that support both guided exploration for novice users and efficient shortcuts for expert users. The approach includes breadcrumb systems, contextual menus, and consistent interaction patterns that work across different levels of data granularity. Navigation also supports comparison workflows and bookmark functionality for frequently accessed analytical views.

Tip: Implement persistent context indicators that show users their current location within the data hierarchy and provide quick access back to higher-level overviews.

What's your approach to designing dashboard filtering and search capabilities?

Filter and search design focuses on matching user mental models for data exploration while providing both guided and open-ended analytical pathways. We design filter systems that support common analytical workflows through smart defaults and suggested filter combinations. Search capabilities accommodate both specific data lookup and exploratory discovery patterns. The design includes filter state management, saved searches, and filter combination suggestions that help users navigate complex data sets efficiently while avoiding confusion about current view context.

Tip: Design filters that show preview results or impact indicators before users apply them, helping them understand the scope of data changes without trial-and-error exploration.
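
The preview idea in this tip can be sketched as a count computed before the filter is applied. This is an illustrative sketch only: it assumes rows are plain dictionaries held in memory and filters are predicate functions, and the `preview_filter` name and sample rows are hypothetical. A production dashboard would more likely ask its data source for the count.

```python
from typing import Any, Callable

Row = dict[str, Any]
Predicate = Callable[[Row], bool]


def preview_filter(rows: list[Row], active: list[Predicate], candidate: Predicate) -> dict[str, int]:
    """Count rows before and after adding `candidate`, without changing the view."""
    current = [r for r in rows if all(p(r) for p in active)]
    after = sum(1 for r in current if candidate(r))
    return {"current": len(current), "after": after, "removed": len(current) - after}


# Hypothetical sales rows and filters.
rows = [
    {"region": "EMEA", "amount": 1200},
    {"region": "EMEA", "amount": 300},
    {"region": "APAC", "amount": 950},
    {"region": "AMER", "amount": 80},
]
active_filters = [lambda r: r["amount"] >= 100]
impact = preview_filter(rows, active_filters, lambda r: r["region"] == "EMEA")
print(f"{impact['after']} of {impact['current']} rows would remain")
```

Showing the "removed" count next to the control lets users judge the scope of a filter before committing to it.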

How do you design information hierarchy for different user expertise levels?

Information hierarchy design accommodates varying domain knowledge and system confidence levels by providing multiple entry points and exploration paths. Expert users get direct access to detailed controls and data while novice users receive guided workflows and contextual help. The hierarchy uses progressive disclosure to prevent cognitive overload while maintaining access to advanced functionality. Smart defaults reduce initial complexity while providing customization options that match user experience growth over time.

Tip: Create role-based dashboard views that can evolve with user expertise rather than forcing all users through the same interface regardless of their knowledge level.

What's your approach to designing cross-dashboard integration and consistency?

Cross-dashboard integration requires establishing consistent navigation patterns, interaction behaviors, and information architectures that work across different analytical contexts while respecting domain-specific needs. We develop shared design patterns and terminology standards that users can learn once and apply broadly. Integration also addresses data consistency, shared filtering contexts, and seamless transitions between different analytical tools. The approach includes governance frameworks for maintaining consistency as dashboard systems evolve and expand.

Tip: Establish a shared component library and interaction pattern guide early in multi-dashboard projects to ensure consistency without stifling domain-specific optimization.

How do you address content strategy for dashboard data presentation?

Content strategy for dashboards addresses how data tells coherent stories that support decision-making rather than just displaying available metrics. We develop approaches to data narrative structure, contextual explanation, and progressive information disclosure that help users understand not just what the data shows but what it means for their decisions. Content strategy includes governance for data labeling, description consistency, and update communication that maintains user confidence in data accuracy and relevance over time. Foresight design helps anticipate how content needs will evolve.

Tip: Include brief contextual explanations or tooltips for key metrics that help users understand what constitutes good performance rather than just showing numbers without context.

What's your approach to data visualization design for dashboards?

Data visualization design focuses on creating visual elements that enhance comprehension and decision-making rather than just displaying data attractively. We select visualization types based on the specific analytical tasks users need to perform, considering cognitive load, comparison requirements, and pattern recognition needs. The design process includes color palette selection for appropriate contrast, typography that supports readability across different screen sizes, and visual hierarchy that guides attention to the most important insights first. Accessibility considerations ensure visualizations work for users with different visual capabilities.

Tip: Choose visualization types based on the specific comparisons or patterns users need to identify rather than defaulting to familiar chart types that may not support analytical goals.

How do you ensure dashboard designs support both overview and detailed analysis?

Dual-purpose design creates visual frameworks that provide immediate insight at the overview level while supporting drill-down exploration without losing context. We use visual hierarchy, progressive disclosure, and interaction design that maintains spatial relationships between overview and detail views. The approach includes contextual zoom, filtering that preserves spatial orientation, and breadcrumb systems that help users navigate back to higher-level perspectives. Visual consistency ensures users can understand detailed views as extensions of overview insights rather than disconnected interfaces.

Tip: Design overview and detail views as part of the same visual system so users can intuitively understand how detailed data relates back to high-level insights.

What's your approach to color and typography in dashboard design?

Color and typography decisions prioritize functional communication over aesthetic preferences, ensuring appropriate contrast ratios for accessibility while supporting intuitive data interpretation. Color schemes accommodate different types of color vision and work effectively in various lighting conditions. Typography hierarchy supports scanning behaviors and rapid information processing while maintaining readability at different screen sizes and viewing distances. The visual framework creates consistency across different types of content while maintaining flexibility for domain-specific needs and brand expression.

Tip: Test color schemes with actual data ranges and edge cases to ensure the visual system remains clear and meaningful across all possible data states.
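
Contrast checks can be automated with the contrast ratio defined in WCAG 2.x, where 4.5:1 is the AA minimum for normal text and 3:1 applies to large text and to graphical objects such as chart elements. The sketch below implements that published formula; the palette, color names, and background are hypothetical examples, not recommended values.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x definition)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color given as '#RRGGBB'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """WCAG contrast ratio: 1.0 for identical colors, about 21 for black on white."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


# Hypothetical chart palette checked against a white dashboard background.
palette = {"series_a": "#1F77B4", "series_b": "#FFDD57", "axis_text": "#767676"}
for name, color in palette.items():
    ratio = contrast_ratio(color, "#FFFFFF")
    print(f"{name}: {ratio:.2f}:1, meets 3:1 = {ratio >= 3.0}, meets 4.5:1 = {ratio >= 4.5}")
```

A ratio check covers luminance contrast only. It does not confirm that two series colors can be told apart by users with color vision deficiencies, so it complements testing with real data rather than replacing it.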

How do you design dashboard layouts that minimize cognitive load?

Layout design reduces cognitive workload through logical grouping, consistent spatial relationships, and progressive information disclosure that matches natural eye-scanning patterns. We minimize distractions that can be confused with important data, size interactive elements appropriately for easy targeting, and structure layouts so users can efficiently complete analytical tasks. The design includes white space management, visual grouping principles, and attention guidance that helps users process information efficiently without feeling overwhelmed by options or competing elements.

Tip: Use the 'squint test' - when you squint at your dashboard, the most important information should still be clearly visible while less critical elements fade into the background.

What's your approach to responsive design for dashboards across different devices?

Responsive dashboard design addresses how analytical workflows adapt across desktop, tablet, and mobile contexts while remaining functionally effective. We prioritize critical information for smaller screens and ensure mobile interfaces support essential decision-making tasks rather than presenting a cramped version of the desktop layout. The design includes touch-friendly interactions, appropriate information density, and context-aware functionality that recognizes when users are accessing dashboards remotely or in situations that change their analytical needs.

Tip: Design mobile dashboard experiences around key alerts and decision points rather than trying to replicate full desktop analytical capabilities on smaller screens.

How do you create visual frameworks that scale across multiple dashboard types?

Scalable visual frameworks establish consistent design patterns, component libraries, and interaction models that work across different analytical contexts while allowing domain-specific optimization. We develop reusable visual components for common dashboard elements like charts, filters, and navigation while providing guidelines for adapting these elements to specific use cases. The framework includes governance for visual consistency, component evolution, and brand expression that maintains user familiarity while supporting organizational growth and changing analytical needs.

Tip: Create a component library that includes both specific implementations and adaptation guidelines so different dashboard projects can maintain consistency while addressing unique requirements.

What's your approach to designing error states and loading experiences?

Error and loading state design ensures users maintain confidence in dashboard reliability while providing clear guidance for resolving issues or understanding system status. We design loading indicators that provide appropriate feedback about progress and expected completion time, error messages that include recovery instructions, and graceful degradation when data sources become unavailable. The approach includes preventing potential errors through smart defaults and validation, while helping users understand system limitations and alternative analytical approaches when problems occur.

Tip: Design error states that provide specific next steps rather than generic error messages, helping users either resolve issues themselves or provide useful information to technical support.

What usability testing methods do you use for dashboard validation?

Dashboard usability testing combines task-based evaluation with think-aloud protocols to understand both effectiveness (goal achievement) and efficiency (time and effort needed to complete tasks) while capturing user satisfaction and confidence levels. We conduct testing at multiple stages from early wireframes through coded prototypes, using representative end-users performing realistic analytical tasks. Testing includes both formative evaluation that informs design iteration and summative evaluation that validates the final design against performance criteria. The approach addresses both individual task completion and workflow integration across multiple dashboard sessions.

Tip: Test dashboard designs with realistic data volumes and complexity rather than clean sample data to identify performance and usability issues that only emerge under real-world conditions.

How do you test dashboard designs with different types of users?

Multi-user testing addresses how different expertise levels, roles, and usage contexts influence dashboard effectiveness. We test with novice users who need guidance, expert users who want efficiency, and occasional users who need clarity and memorability. Testing scenarios include both routine analytical tasks and edge cases like urgent decision-making or system failures. The approach validates that design solutions work across the full spectrum of intended users rather than optimizing for a single user type at the expense of others.

Tip: Include testing scenarios that simulate high-pressure or time-constrained situations where users need to extract insights quickly, as these often reveal interface weaknesses not apparent in calm testing conditions.

What's your approach to testing dashboard performance and technical usability?

Performance testing examines how technical limitations impact user experience, including loading times, data refresh rates, and system responsiveness during complex analytical operations. We test across different network conditions, device capabilities, and concurrent user scenarios to understand real-world performance constraints. The testing includes user tolerance levels for different types of delays and how performance issues influence analytical workflows and decision quality. Testing also addresses data accuracy perception and user confidence in system reliability.

Tip: Establish performance baselines for critical user tasks and test how performance degradation impacts task completion rates and user confidence in dashboard insights.

How do you validate information architecture and navigation design?

Information architecture testing uses card sorting validation, first-click testing, and navigation pathway analysis to ensure users can find information efficiently and understand the organizational logic. We test both directed tasks (finding specific information) and exploratory tasks (discovering insights through browsing) to validate that the architecture supports different analytical approaches. Testing includes tree testing (also called reverse card sorting) to validate category labels and navigation structure before visual design implementation.

Tip: Test navigation with users who haven't seen the dashboard before - if they can't find key information within their first few clicks, the information architecture likely needs revision.

What's your approach to testing data visualization effectiveness?

Visualization testing evaluates how well different chart types, color schemes, and layout approaches support accurate data interpretation and insight generation. We test users' ability to identify trends, make comparisons, and extract actionable insights from different visualization approaches. Testing includes accuracy assessments (do users interpret data correctly), efficiency measurements (how quickly do they reach insights), and preference evaluation (which approaches feel most intuitive). The process validates that visualizations support intended analytical tasks rather than just looking visually appealing.

Tip: Test data visualization with edge cases like missing data, extreme values, or unusual patterns to ensure the visual design remains clear and interpretable under all conditions.

How do you test dashboard accessibility and inclusive design?

Accessibility testing ensures dashboard designs work effectively for users with different visual, cognitive, and motor capabilities while improving usability for all users. We test with assistive technologies like screen readers, keyboard-only navigation, and voice control systems to identify barriers to access. Testing includes color contrast validation, focus management assessment, and alternative format evaluation. The process also addresses cognitive accessibility through task complexity evaluation and clear instruction testing that helps users with different cognitive processing capabilities.

Tip: Include accessibility testing early in the design process rather than as a final check - many accessibility improvements also enhance general usability and are easier to implement during initial design.

What's your approach to longitudinal testing of dashboard usage patterns?

Longitudinal testing examines how dashboard usage evolves over time as users develop expertise, organizational needs change, and new analytical requirements emerge. We track user behavior patterns, feature adoption rates, and performance improvements across multiple sessions and extended time periods. The approach includes identifying when users outgrow initial design assumptions and need more advanced capabilities. Foresight design methods help anticipate future testing needs and user evolution patterns that inform iterative dashboard optimization.

Tip: Plan for follow-up testing sessions 3-6 months after dashboard deployment to identify how usage patterns change and what new user needs emerge with experience.

What deliverables do you provide for dashboard development implementation?

Implementation deliverables include annotated wireframes that clearly show content and function flow from a user perspective, design style guides that capture visual frameworks and specific data visualization specifications, and design pattern libraries for reusable dashboard components. We provide detailed interaction specifications, responsive design guidelines, and accessibility requirements documentation. The deliverables are designed to preserve design intent from the design phase through product launch, so quality is not lost to misinterpretation during development.

Tip: Ensure implementation documentation includes specific examples of edge cases and error states rather than just happy path scenarios to prevent development gaps.

How do you support development teams during dashboard implementation?

Development support includes ongoing design consultation throughout construction phases, providing additional visual elements like icons and customized design patterns as needed. We conduct regular design reviews to maintain intended quality levels and support code testing activities. The process includes user experience testing during development phases to ensure technical implementation doesn't compromise user experience quality. Support addresses both technical design questions and user experience validation as the dashboard takes functional form.

Tip: Establish regular design review checkpoints during development rather than waiting until completion to identify and address implementation issues that could impact user experience.

What's your approach to creating design systems for dashboard projects?

Design systems for dashboards establish consistent visual and interaction patterns across multiple analytical interfaces while providing flexibility for domain-specific needs. We create component libraries that include data visualization standards, interaction patterns, color systems, and typography guidelines specifically optimized for analytical interfaces. The system includes governance frameworks for component evolution, brand expression guidelines, and usage documentation that enables distributed development while maintaining experience consistency and quality standards.

Tip: Build design systems that include data visualization components and interaction patterns specific to analytical interfaces rather than adapting general web design systems that may not address dashboard-specific needs.

How do you handle responsive design implementation for complex dashboards?

Responsive implementation requires careful planning of content prioritization, interaction adaptation, and performance optimization across different device contexts. We provide detailed responsive specifications that address how complex visualizations adapt to smaller screens, how filtering and navigation work on touch interfaces, and how critical information remains accessible across all device types. Implementation includes testing across different screen sizes, input methods, and usage contexts to ensure that functionality, not just visual layout, carries over to each device.

Tip: Define mobile dashboard functionality based on critical user tasks rather than trying to replicate all desktop capabilities, focusing on key alerts and essential decision-making information.
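
One way to make task-based prioritization concrete is to give each dashboard component a priority and let the viewport width decide how far down the list to go. The following is a minimal sketch in Python under stated assumptions: the `Widget` type, the breakpoint values, and the three-level priority scale are all hypothetical and would come from your own task analysis.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class Widget:
    name: str
    priority: int  # 1 = critical alert or key decision metric, 3 = nice to have


# Hypothetical breakpoints: (maximum width in px, highest priority level shown).
MAX_PRIORITY_BY_WIDTH = [(480, 1), (1024, 2)]


def widgets_for_width(widgets: Sequence[Widget], width_px: int) -> List[str]:
    """Return the names of widgets to render at this width, most critical first."""
    max_priority = 3  # wide screens show everything
    for limit, priority in MAX_PRIORITY_BY_WIDTH:
        if width_px <= limit:
            max_priority = priority
            break
    ordered = sorted(widgets, key=lambda w: w.priority)
    return [w.name for w in ordered if w.priority <= max_priority]


dashboard = [
    Widget("raw_table", 3),
    Widget("alerts", 1),
    Widget("revenue_trend", 2),
]
print(widgets_for_width(dashboard, 375))
print(widgets_for_width(dashboard, 1440))
```

The point of the sketch is that the phone layout is derived from task priority rather than from shrinking the desktop layout.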

What's your approach to data visualization implementation guidelines?

Data visualization implementation requires detailed specifications for chart types, color usage, animation behaviors, and interaction states that maintain clarity and accuracy across all data conditions. We provide guidelines for handling edge cases like missing data, extreme values, and performance limitations that affect visualization rendering. Implementation documentation includes accessibility requirements for charts, alternative text specifications, and data table alternatives that ensure visualizations work for users with different capabilities and assistive technologies.

Tip: Provide developers with comprehensive test datasets that include edge cases, extreme values, and missing data to ensure visualization implementations handle all real-world data conditions gracefully.
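
To illustrate what handling these data conditions can look like, here is a minimal Python sketch that separates a raw series into plottable points, explicit gaps, and flagged extreme values. The function name `prepare_series` and the flagging rule (distance from the median measured in median absolute deviations) are illustrative assumptions, not a prescribed method.

```python
import math
from statistics import median
from typing import Optional, Sequence


def prepare_series(values: Sequence[Optional[float]], clip_factor: float = 5.0) -> dict:
    """Split a raw series into plottable points, gaps, and flagged extremes.

    Hypothetical helper: the extreme-value rule below is an assumption
    chosen for illustration only.
    """
    def is_missing(v: Optional[float]) -> bool:
        return v is None or math.isnan(v)

    # Record missing positions so the chart can draw an explicit gap
    # instead of silently connecting the neighbouring points.
    gaps = [i for i, v in enumerate(values) if is_missing(v)]
    points = [(i, v) for i, v in enumerate(values) if not is_missing(v)]
    if not points:
        return {"points": [], "gaps": gaps, "extremes": []}

    present = [v for _, v in points]
    center = median(present)
    mad = median(abs(v - center) for v in present)  # median absolute deviation

    # Flag values far from the median so the chart can annotate them or
    # switch scale rather than flattening every other value.
    extremes = [i for i, v in points if mad > 0 and abs(v - center) > clip_factor * mad]
    return {"points": points, "gaps": gaps, "extremes": extremes}


test_data = [10.0, 12.0, None, 11.0, float("nan"), 500.0, 9.0]
result = prepare_series(test_data)
print(result["gaps"], result["extremes"])
```

A test dataset like `test_data` exercises both missing values and a single extreme value in one pass.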

How do you ensure design quality during agile development processes?

Maintaining design quality in agile development requires establishing design standards early, creating rapid review processes, and keeping designers involved throughout sprint cycles. We develop design review criteria that can be applied quickly during sprints while preserving core user experience principles. The approach includes design debt management, component consistency monitoring, and user experience regression testing, which together prevent quality erosion as features are added incrementally.

Tip: Create design quality checklists that development teams can use for self-review before design handoffs, focusing on common issues like contrast ratios, interaction states, and mobile responsiveness.
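
Contrast ratio is one checklist item that can be automated. The sketch below, in Python, computes the contrast ratio between two hex colors using the relative luminance formula from WCAG 2.x and checks it against the AA thresholds (4.5:1 for normal text, 3:1 for large text). The function names are our own, and the parser assumes six-digit hex colors.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x definition)."""
    c = channel / 255.0
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color given as '#RRGGBB' (six digits assumed)."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """Contrast ratio between two colors, from 1.0 (identical) up to 21.0."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    """Check the WCAG AA minimum: 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)


print(round(contrast_ratio("#000000", "#FFFFFF"), 2))
print(passes_aa("#767676", "#FFFFFF"), passes_aa("#777777", "#FFFFFF"))
```

A check like this can run over a palette file before review, leaving reviewers to focus on issues that need human judgment.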

What's your approach to performance optimization for dashboard user experience?

Performance optimization balances technical efficiency with user experience quality, so that dashboards stay responsive during complex analytical operations without sacrificing visual clarity. We provide guidelines for progressive loading, data chunking, and caching strategies that minimize perceived wait times. Optimization also covers loading states that give useful feedback and graceful degradation when performance limits are reached. Foresight design helps anticipate future performance requirements as data volumes grow.

Tip: Optimize for perceived performance by providing immediate feedback and progressive disclosure rather than just focusing on technical loading times that may not reflect actual user experience quality.
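
As a rough illustration of data chunking combined with progress feedback, the following Python sketch yields a dataset in fixed-size chunks along with the fraction loaded so far, which a loading state could display. The function name `load_in_chunks` and the default chunk size are assumptions; a real dashboard would typically fetch each chunk from a backend rather than slice an in-memory list.

```python
from typing import Iterator, List, Sequence, Tuple


def load_in_chunks(
    rows: Sequence[dict], chunk_size: int = 500
) -> Iterator[Tuple[List[dict], float]]:
    """Yield (chunk, progress) pairs so the UI can render partial results.

    progress is the fraction of rows delivered so far, between 0 and 1.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    total = len(rows)
    for start in range(0, total, chunk_size):
        chunk = list(rows[start:start + chunk_size])
        loaded = min(start + chunk_size, total)
        yield chunk, loaded / total


rows = [{"id": i} for i in range(1200)]
for chunk, progress in load_in_chunks(rows):
    # A real dashboard would draw the chunk and update a progress indicator here.
    print(len(chunk), f"{progress:.0%}")
```

Rendering the first chunk immediately gives users something to read while the rest arrives, which is what perceived performance depends on.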

How do you measure the success of dashboard UX design implementations?

Success measurement combines user task performance metrics (completion rates, error rates, time-to-insight) with user satisfaction assessments and business impact evaluation. We track effectiveness through goal achievement rates, efficiency through task completion times and error reduction, and satisfaction through user confidence levels and continued usage patterns. Success metrics also include adoption rates, feature utilization, and decision-making improvement indicators that demonstrate business value. The measurement framework addresses both immediate usability improvements and long-term user experience evolution.

Tip: Establish baseline metrics for key user tasks before dashboard redesign to demonstrate clear improvement and identify areas where design changes have the greatest impact on user performance.
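
A baseline can be as simple as a few summary numbers per task. The Python sketch below computes completion rate, errors per attempt, and median time-to-insight from recorded task attempts. The `TaskAttempt` record and its fields are hypothetical; the sample values are made up purely to show the calculation.

```python
from dataclasses import dataclass
from statistics import median
from typing import List, Optional


@dataclass
class TaskAttempt:
    completed: bool
    errors: int
    seconds_to_insight: Optional[float]  # None when the user gave up


def summarize(attempts: List[TaskAttempt]) -> dict:
    """Summarize one task's attempts into baseline usability metrics."""
    if not attempts:
        raise ValueError("at least one attempt is required")
    done = [a for a in attempts if a.completed]
    times = [a.seconds_to_insight for a in done if a.seconds_to_insight is not None]
    return {
        "completion_rate": len(done) / len(attempts),
        "errors_per_attempt": sum(a.errors for a in attempts) / len(attempts),
        "median_time_to_insight": median(times) if times else None,
    }


# Illustrative data only, not measurements from a real study.
baseline = summarize([
    TaskAttempt(True, 1, 40.0),
    TaskAttempt(True, 0, 60.0),
    TaskAttempt(False, 3, None),
    TaskAttempt(True, 0, 50.0),
])
print(baseline)
```

Running the same summary on the same tasks after a redesign gives a like-for-like comparison.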

What analytics do you recommend for ongoing dashboard optimization?

Dashboard analytics should track user behavior patterns, feature utilization, performance bottlenecks, and task completion pathways that inform iterative design improvements. We recommend monitoring click-through patterns, time spent in different dashboard sections, abandonment points, and successful task completion flows. Analytics also include error tracking, support request patterns, and user feedback collection that identify usability issues requiring design attention. The approach balances quantitative usage data with qualitative user experience insights.

Tip: Focus analytics on user journey completion rather than just page views or clicks to understand how well your dashboard supports end-to-end analytical workflows and decision-making processes.
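
To show the difference between counting clicks and measuring journey completion, here is a minimal Python sketch that checks how far each session progressed through an ordered journey and where incomplete sessions stopped. The step names and the session log format are assumptions for illustration; real event data would come from your analytics tooling.

```python
from collections import Counter
from typing import Dict, Sequence


def furthest_step(events: Sequence[str], journey: Sequence[str]) -> int:
    """Count how many journey steps a session completed, in order."""
    reached = 0
    for event in events:
        if reached < len(journey) and event == journey[reached]:
            reached += 1
    return reached


def journey_report(sessions: Dict[str, Sequence[str]], journey: Sequence[str]) -> dict:
    """Report completion rate and the step each incomplete session never reached."""
    reached = [furthest_step(events, journey) for events in sessions.values()]
    completed = sum(1 for r in reached if r == len(journey))
    abandoned_before = Counter(journey[r] for r in reached if r < len(journey))
    rate = completed / len(sessions) if sessions else 0.0
    return {"completion_rate": rate, "abandoned_before": dict(abandoned_before)}


# Hypothetical journey and session logs.
journey = ["open", "filter", "inspect", "decide"]
sessions = {
    "s1": ["open", "filter", "inspect", "decide"],
    "s2": ["open", "filter"],
    "s3": ["open", "inspect", "filter"],
    "s4": ["open", "hover", "filter", "inspect", "hover", "decide"],
}
report = journey_report(sessions, journey)
print(report)
```

In this sample, two sessions stall before the `inspect` step, which points design attention at that transition rather than at overall click volume.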

How do you optimize dashboards based on user feedback and usage data?

Optimization requires systematic analysis of user feedback patterns, usage analytics, and performance data to identify specific improvement opportunities. We prioritize changes based on impact on user task completion, frequency of issues, and business value of improvements. Optimization includes A/B testing of design alternatives, iterative refinement of information architecture, and continuous improvement of visualization effectiveness. The process includes user validation of proposed changes before implementation to ensure improvements address real user needs rather than assumptions.

Tip: Create feedback loops that connect user behavior data with specific design elements so you can identify which interface changes will have the most significant impact on user experience quality.

What's your approach to testing dashboard design alternatives?

Alternative testing uses controlled comparison methods to evaluate different design approaches for specific user tasks and contexts. We test different visualization types, layout alternatives, and interaction patterns to identify optimal solutions for user goals. Testing includes both quantitative performance measures and qualitative preference assessment that reveals user mental models and expectations. The approach validates design decisions through evidence rather than opinion, ensuring optimization efforts focus on changes that genuinely improve user experience and task performance.

Tip: Test design alternatives with realistic user tasks and data rather than artificial scenarios to ensure test results reflect actual performance improvements users will experience.
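
For the quantitative side of a comparison, one common option is a two-proportion z-test on task completion rates. The Python sketch below is an assumption about how such a comparison might be run, not a description of our specific testing protocol, and the sample counts are invented. It relies on a normal approximation, so it is only reasonable for fairly large samples.

```python
import math
from typing import Tuple


def two_proportion_z_test(
    success_a: int, n_a: int, success_b: int, n_b: int
) -> Tuple[float, float]:
    """Compare completion rates of designs A and B.

    Returns (z, two_sided_p_value) using the pooled normal approximation.
    """
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # all users succeeded or all failed in both groups
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Invented example: 60 of 100 users completed the task with layout A, 75 of 100 with B.
z, p = two_proportion_z_test(60, 100, 75, 100)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A statistical result like this covers only the performance measure; the qualitative preference assessment still has to explain why one alternative works better.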

How do you handle dashboard optimization for evolving business requirements?

Evolving requirements demand flexible design systems that can accommodate new metrics, changing analytical workflows, and modified decision-making processes without fundamental interface reconstruction. We create design frameworks that support addition of new data sources, modification of existing visualizations, and adaptation to new user roles or business contexts. The approach includes change impact assessment, user communication strategies, and training support that helps users adapt to dashboard evolution while maintaining productivity and confidence.

Tip: Build dashboard architectures that can evolve incrementally rather than requiring complete redesigns when business requirements change, focusing on modular components that can be reconfigured rather than replaced.

What role does AI play in dashboard UX design and optimization?

AI enhances dashboard design through pattern recognition in user behavior, automated insight generation, and personalization of analytical interfaces based on individual usage patterns. AI applications include intelligent data filtering, anomaly detection that highlights important changes, and predictive analytics that anticipate user information needs. However, AI implementations must maintain user control and transparency about how automated features work. The design challenge involves making AI assistance helpful without creating over-reliance or reducing user analytical thinking capabilities.

Tip: Implement AI features that augment human analytical capabilities rather than replacing user decision-making, ensuring users understand how AI recommendations are generated and can override automated suggestions when needed.
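
Transparency can be built into even a simple anomaly highlight. The Python sketch below flags values that deviate strongly from a trailing window and attaches a plain-language reason to each flag, so users can see why something was highlighted and judge it themselves. The z-score rule, window size, and threshold are illustrative assumptions, a stand-in for whatever detection method a real product uses.

```python
from statistics import mean, stdev
from typing import List, Sequence


def flag_anomalies(
    series: Sequence[float], window: int = 7, threshold: float = 3.0
) -> List[dict]:
    """Flag points far from the trailing-window mean, with an explanation."""
    flags = []
    for i in range(window, len(series)):
        history = series[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            continue  # flat history: no meaningful deviation scale
        z = (series[i] - mu) / sigma
        if abs(z) >= threshold:
            flags.append({
                "index": i,
                "value": series[i],
                # Plain-language reason shown next to the highlight.
                "reason": (
                    f"{series[i]} is {abs(z):.1f} standard deviations from the "
                    f"previous {window}-point average of {mu:.1f}"
                ),
            })
    return flags


daily_orders = [100, 102, 98, 101, 99, 100, 103, 160, 101]
for flag in flag_anomalies(daily_orders):
    print(flag["index"], flag["reason"])
```

Because the rule and its parameters are visible, users can adjust the threshold or dismiss a flag, which keeps the automated suggestion under their control.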

How do you ensure long-term dashboard usability and user adoption?

Long-term usability requires ongoing user research, systematic design maintenance, and proactive adaptation to changing user needs and technological capabilities. We establish governance frameworks for design consistency, regular usability assessment schedules, and user feedback collection systems that identify emerging usability issues before they impact user adoption. The approach includes training program development, change management support, and continuous improvement processes that maintain dashboard effectiveness as users develop expertise and organizational needs evolve over time.

Tip: Plan for regular design health assessments every 6-12 months to identify usability debt, changing user needs, and opportunities for optimization before problems impact user satisfaction and adoption rates.